I am a fifth-year PhD candidate at NYU's CILVR Lab and Center for Data Science. My research largely focuses on better understanding the reinforcement learning (RL) framework and developing better RL algorithms.

A few questions I've been thinking about:

- How to build agents that efficiently explore and autonomously discover a useful model of the world?

- How to design efficient and scalable RL algorithms with a minimal bag of tricks?

- How can foundation models be leveraged to discover what we do not know?

I completed my Master's at Mila / McGill University, co-advised by Prof. Joelle Pineau and Prof. Blake Richards. My master's thesis introduces new ways of decomposing the value function in RL for more efficient learning. This also relates to neuroscientific theories of how the hippocampus works.

I was fortunate to work with a number of researchers in neuroscience and psychiatry throughout my undergraduate studies: Dr. Yannis Trakadis in psychiatric genomics, Prof. Mallar Chakravarty in computational neuroscience and neuroimaging, and Prof. Karl Friston in theoretical neuroscience. These experiences gave me an appreciation for the tools, knowledge, and perspectives of the neural sciences.

Education

New York University

Ph.D. in Data Science

Advisors: Rob Fergus, Rajesh Ranganath

Mila / McGill University

M.Sc. in Computer Science

Advisors: Joelle Pineau, Blake Richards

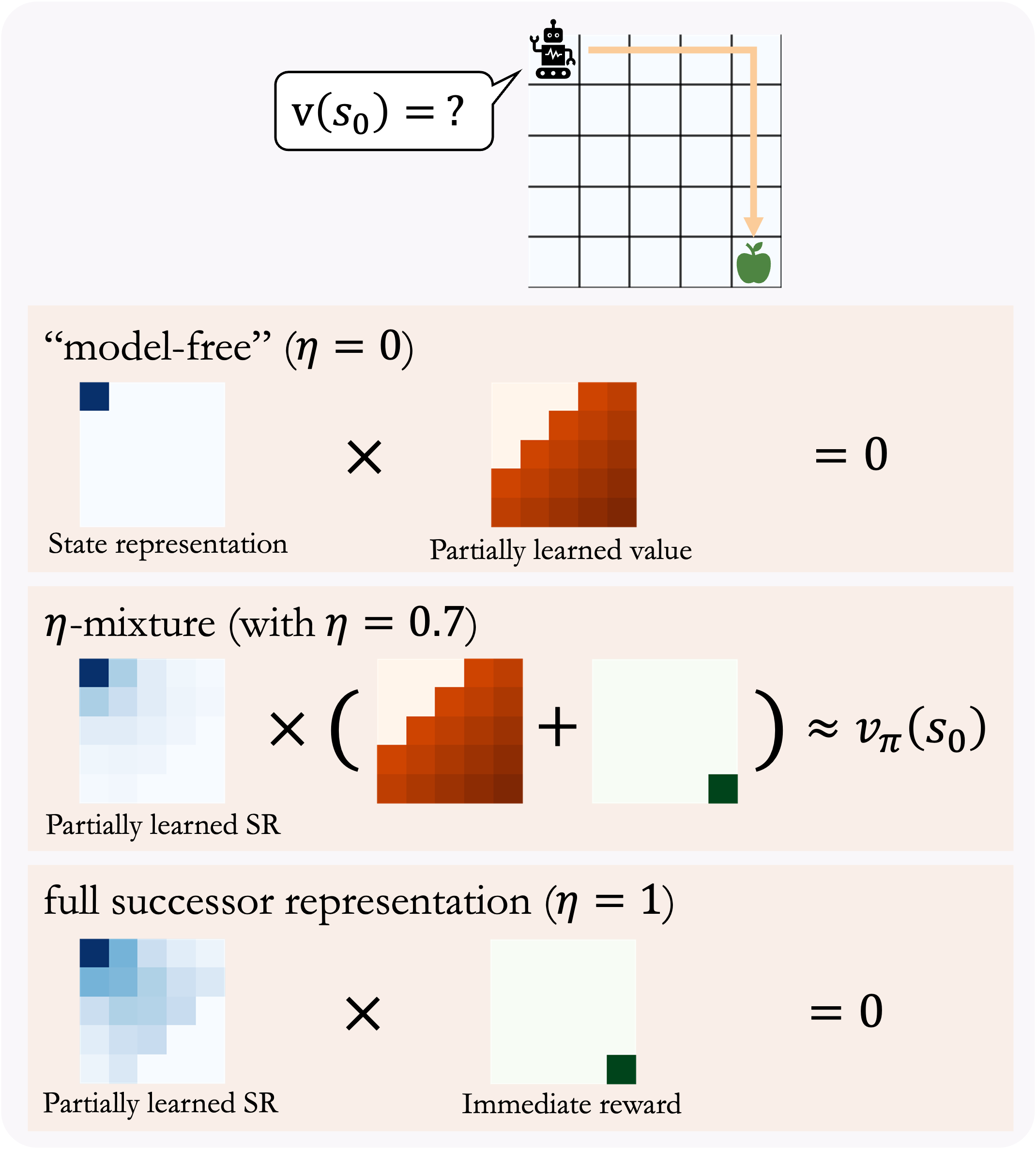

Thesis: On Successor Representations for value learning: efficient credit assignment through implicit models

McGill University

B.Sc. in Neuroscience

Publications

NeurIPS Workshop on Foundations of Reasoning in Language Models 2025 (accepted). Under submission 2025

KL-Regularized Reinforcement Learning is Designed to Mode Collapse

Anthony GX-Chen, Jatin Prakash, Jeff Guo, Rob Fergus, Rajesh Ranganath

We leverage the equivalence between RL and distribution matching to prove that KL-regularized RL naturally leads to mode-collapsed optimal policy distributions. We then introduce a simple, principled algorithm that explicitly learns multi-modal policies by matching to a better target distribution. This improves quality and diversity across KL-regularized RL settings, from large language models to drug discovery.

Conference on Language Modeling (COLM) 2025

Language Agents Mirror Human Causal Reasoning Biases. How Can We Help Them Think Like Scientists?

Anthony GX-Chen, Dongyan Lin*, Mandana Samiei*, Doina Precup, Blake Richards, Rob Fergus, Kenneth Marino

Language model (LM) agents exhibit human-like biases when causally exploring. We compare this to human data. We also develop a scalable test-time sampling algorithm to fix this, by sampling hypotheses as code and acting to eliminate them.

ICLR 2025

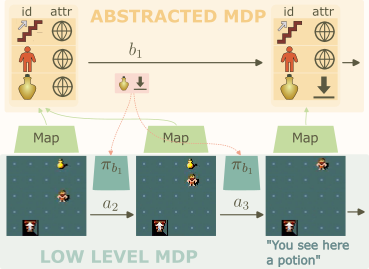

Efficient Exploration and Discriminative World Model Learning with an Object-Centric Abstraction

Anthony GX-Chen, Kenneth Marino, Rob Fergus

Hierarchical reinforcement learning + object-centric abstraction + discriminative world model learning. It explores efficiently, plans over long horizons, rapidly solves single tasks, and transfers to different item types and environments.

NeurIPS Workshop on Intrinsically Motivated Open-ended Learning 2024

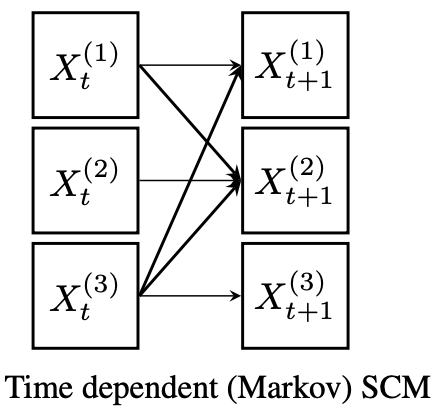

Testing Causal Hypotheses through Hierarchical Reinforcement Learning

Anthony GX-Chen*, Dongyan Lin*, Mandana Samiei*

A framework to think about structural causal models (SCMs) and Markov Decision Processes (MDPs) together, for agentic systems that can test their own causal hypotheses.

Reinforcement Learning Conference (RLC) 2024

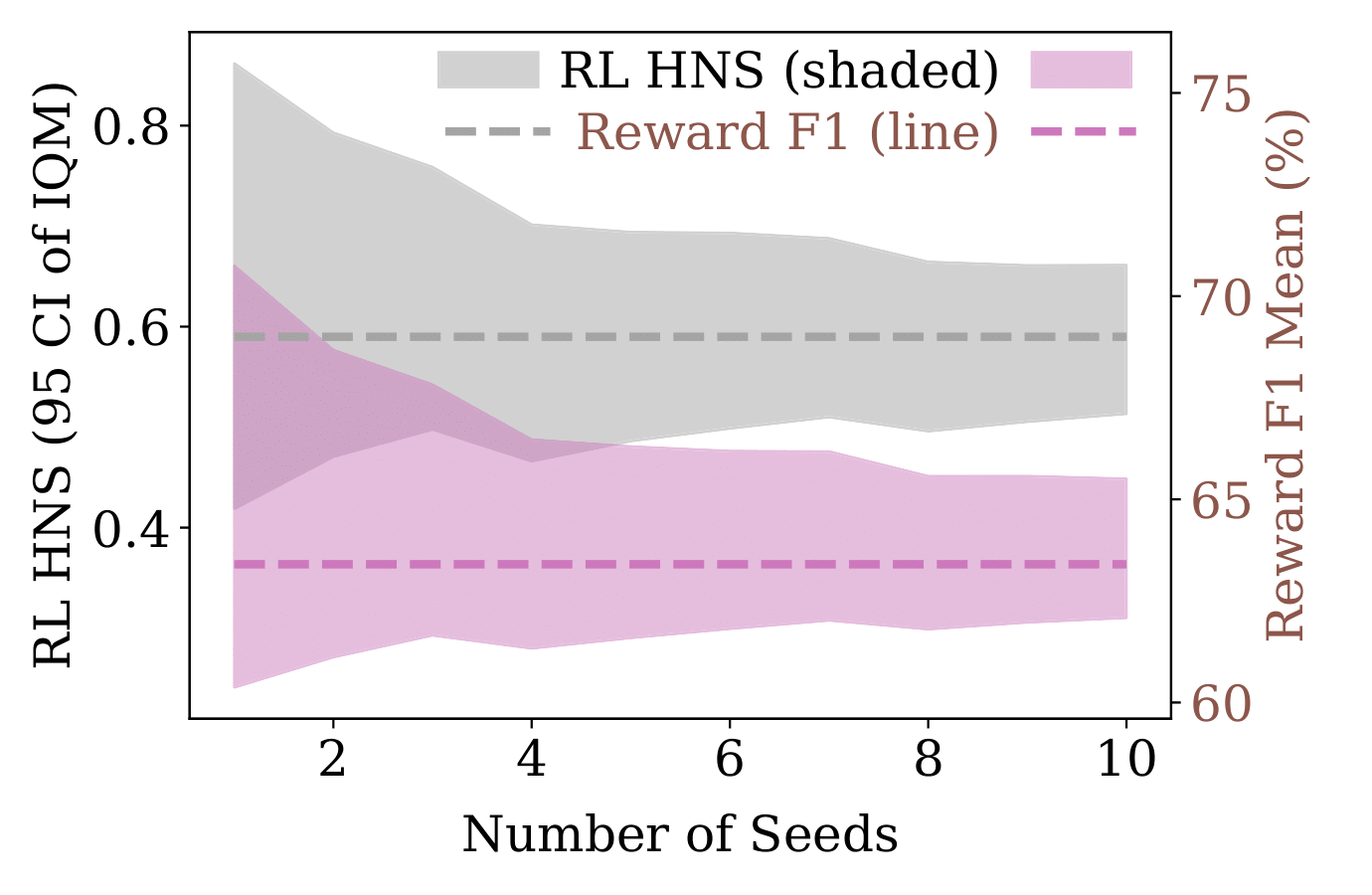

Light-weight probing of unsupervised representations for reinforcement learning

Wancong Zhang, Anthony GX-Chen, Vlad Sobal, Yann LeCun, Nicolas Carion

We investigate different design choices that make unsupervised representation learning work for reinforcement learning, and design a computationally efficient linear probe that correlates strongly with eventual downstream RL performance.

AAAI 2022

A Generalized Bootstrap Target for Value-Learning, Efficiently Combining Value and Feature Predictions

Anthony GX-Chen, Veronica Chelu, Blake Richards, Joelle Pineau

A new way of constructing more efficient one-step bootstrapped learning targets, by combining value estimates (reward prediction) and successor features (feature prediction) in a complementary way. This is a generalization of TD(0), and leads to a spectrum of one-step learning targets trading off value vs. feature predictions.

NeurIPS Workshop on Biological and Artificial Reinforcement Learning 2020

Lambda Successor Return Error

Anthony GX-Chen, Veronica Chelu, Blake Richards, Joelle Pineau

We show that the value prediction error from the lambda-return can be factorized into one-step temporal difference (TD) errors and a successor-like representation (SR). This leads to a new algorithm that uses the SR for error assignment. We show that, in a tabular setting, this results in faster value function learning compared to both the lambda-return and the (original) SR. We further discuss this perspective in light of the recent neuroscience hypothesis that the brain uses successor-like representations.

Frontiers in Artificial Intelligence 2020

A Bayesian Account of Generalist and Specialist Formation Under the Active Inference Framework

Anthony GX-Chen, David Benrimoh, Thomas Parr, Karl J. Friston

We model animal / human behaviour using a variational Bayesian (Active Inference) framework. Specifically, we propose how the priors over an agent's policy space can be learned as a result of experience, and how this leads to the phenomenon of specialist and generalist formation. Finally, we discuss this in the context of computational psychiatry, where symptoms can be explained through faulty inference.

NeuroImage 2020

Investigating microstructural variation in the human hippocampus using non-negative matrix factorization

Raihaan Patel, Christopher J Steele, Anthony GX-Chen, Sejal Patel, Gabriel A Devenyi, Jürgen Germann, Christine L Tardif, M Mallar Chakravarty

Using non-negative matrix factorization to discover interpretable components of the human hippocampus from neuroimaging data.

American Journal of Medical Genetics Part B: Neuropsychiatric Genetics 2019

Machine learning in schizophrenia genomics, a case‐control study using 5,090 exomes

Yannis J Trakadis, Sameer Sardaar, Anthony GX-Chen, Vanessa Fulginiti, Ankur Krishnan

Applying machine learning to genetic data for high-accuracy schizophrenia risk prediction and gene feature analysis.